Implementing API Pagination with Node.js,Express and Mongoose. An Example with 1 million records.

Implementing API Pagination with Node.js,Express and Mongoose. An Example with 1 million records.

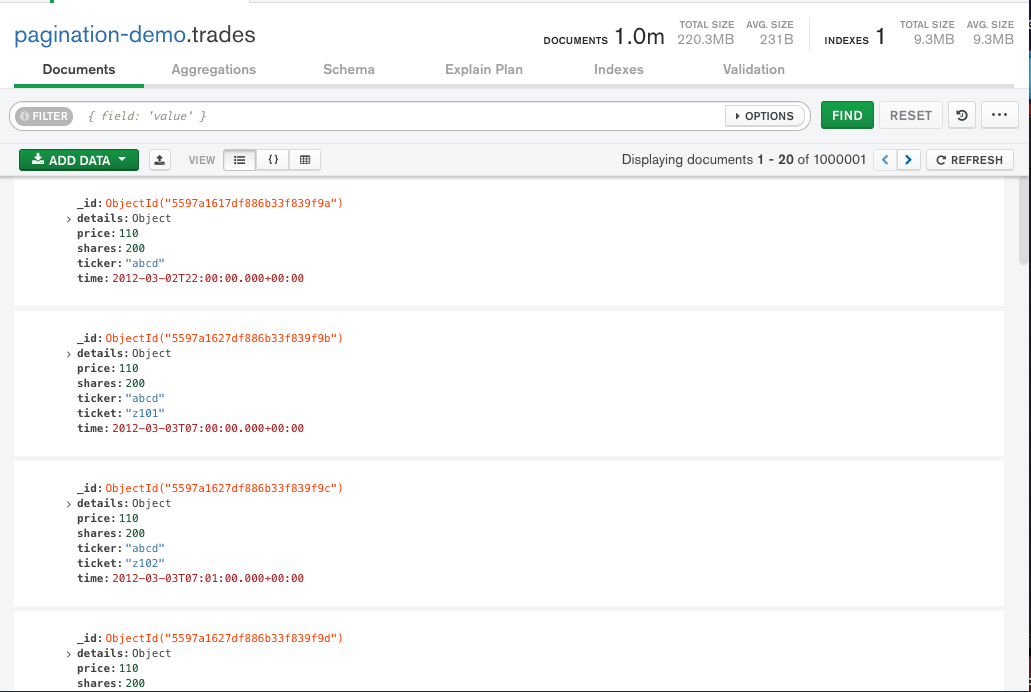

This article Implements API pagination with nodejs, mongoose. An example with 1 million records. Consider that your application has a million records and users fetching the data. Can the server handle a million DB records in a single GET request?. How can you handle GET requests if you have a million records in your database?.

Well, that’s where pagination comes to the rescue. Here we will replicate the production scenario, such as handling a million records and see how to implement different pagination methods. Let’s implement API pagination with nodejs, mongoose

Recommended Nodejs Course

Implementing pagination with nodejs mongoose

Types of pagination

In general, There are two types of pagination. they are,

- Offset based pagination

- Cursor based pagination

Offset based pagination

It is one of the most common pagination methods we are using for decades. It simply uses limit and offset in SQL queries to paginate the data from database.

In NOSQL database, it will be limit and skip

SELECT * FROM users

ORDER BY timestamp

OFFSET 10

LIMIT 5Complete source code is available in the GitHub repo

Let’s implement it in our application and see the advantages/disadvantages of it. Implementation for offset pagination is straightforward,

const fetchCompanies = async (req, res) => {

try {

const limit = parseInt(req.query.limit);

const offset = parseInt(req.query.skip);

const tradesCollection = await Trades.find().skip(offset).limit(limit);

const tradesCollectionCount = await Trades.count();

const totalPages = Math.ceil(tradesCollectionCount / limit);

const currentPage = Math.ceil(tradesCollectionCount % offset);

res.status(200).send({

data: tradesCollection,

paging: {

total: tradesCollectionCount,

page: currentPage,

pages: totalPages,

},

});

} catch (e) {

console.log("Error", e);

res.status(500).send({

data: null,

});

}

};An important line here is,

const tradesCollection = await Trades.find().skip(offset).limit(limit);MongoDB has skip and limit operators to implement offset based pagination. On sending the response, it is recommended to send them along with pagination data.

res.status(200).send({

data: tradesCollection,

paging: {

total: tradesCollectionCount,

page: currentPage,

pages: totalPages,

},

});Demo

Drawbacks of Offset based pagination

- Offset pagination doesn’t scale for large datasets. Using SQL

offsetor NOSQLskipoperators. It scans the record one by one and skip or offset it. If your database has a million records, just like we see in this tutorial, offset-based pagination can affect scalability. - If you have real-time data, offset-based pagination will be unreliable and problematic. There will be skipping of data or duplicate data. Read more

Cursor based pagination

Cursor-based pagination uses a unique record as a cursor for the fetch. When we pass a cursor and limit, it gets all the data that are less than the cursor value along with the limit. Implementing cursor based pagination with nodejs,mongoose

The important thing here is the cursor value should be sequential or timestamps. In that way, we can use comparison operators to fetch the data.

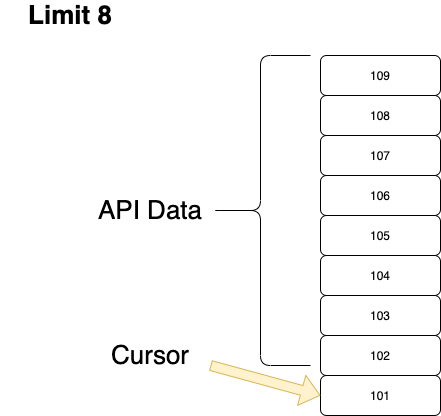

Before getting into the coding part of it, let’s do a simple walk-through on cursor-based pagination. Let’s say the limit is 8, and the user is making a request.

When it comes for the first time, there will be no cursor value, and it fetches the most recent value.

Note: Here, we use time as a cursor value which is in descending order.

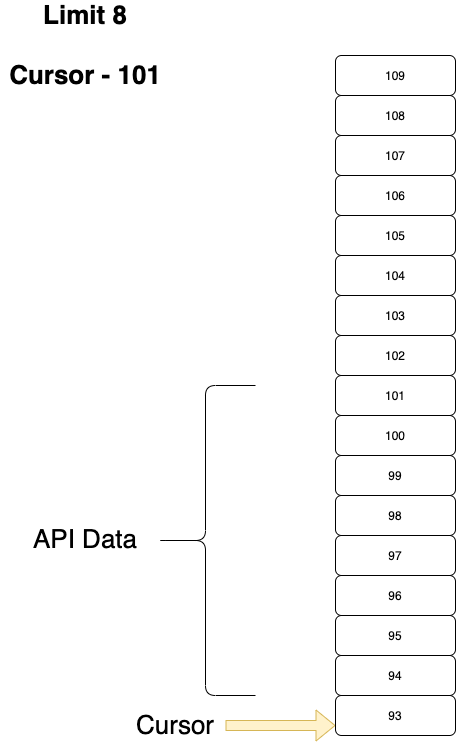

DB call fetches 8+1 value from DB because we need the 9th value as a cursor for the next fetch. Then, we can send the cursor value along with the next request. we need to compare that cursor value and fetch data that are less than the cursor

Let’s see the implementation of cursor-based pagination.

const limit = parseInt(req.query.limit);

const cursor = req.query.cursor;

let decryptedCursor;

let tradesCollection;

if (cursor) {

decryptedCursor = decrypt(cursor);

let decrypedDate = new Date(decryptedCursor * 1000);

tradesCollection = await Trades.find({

time: {

$lt: new Date(decrypedDate),

},

})

.sort({ time: -1 })

.limit(limit + 1)

.exec();

} else {

tradesCollection = await Trades.find({})

.sort({ time: -1 })

.limit(limit + 1);

}

const hasMore = tradesCollection.length === limit + 1;

let nextCursor = null;

if (hasMore) {

const nextCursorRecord = tradesCollection[limit];

var unixTimestamp = Math.floor(nextCursorRecord.time.getTime() / 1000);

nextCursor = encrypt(unixTimestamp.toString());

tradesCollection.pop();

}

res.status(200).send({

data: tradesCollection,

paging: {

hasMore,

nextCursor,

},

});Here, we check if it’s the first request or not based on the cursor value. If the request has a cursor as a query param, we fetch the data based on that.

Note : We encrypt the cursor value for security purpose. it’s recommended to encrypt the cursor value before sending them as response(for security)

If it has the cursor, we use it in our DB query with a comparison operator,

tradesCollection = await Trades.find({

time: {

$lt: new Date(decrypedDate),

},

})

.sort({ time: -1 })

.limit(limit + 1)

.exec();Then, we can find if our database has more data based on the following condition.

const hasMore = tradesCollection.length === limit + 1;Since, we fetch limit + 1 data, we can find out that it has more data if our fetched data and limit + 1 are same.

If it has more value, we need to determine the next cursor value. we can do that using,

if (hasMore) {

const nextCursorRecord = tradesCollection[limit];

var unixTimestamp = Math.floor(nextCursorRecord.time.getTime() / 1000);

nextCursor = encrypt(unixTimestamp.toString());

tradesCollection.pop();

}Another critical thing to note here is removing the last element from the fetched data. Because we needed that value to calculate the cursor, it shouldn’t be for the end-user results.

Demo

Further Reading

Evolving API Pagination at Slack