Here’s what makes Apache Kafka so fast - Kafka Series - Part 3


This is Part 3 of the Kafka Series.

Kafka is a distributed streaming platform that supports high-throughput, fault-tolerant, low-latency delivery of messages.

Low-Latency IO:

Here, we will see how Kafka achieves low-latency message delivery.

A traditional way to achieve low latency in message delivery is to keep the data in random access memory (RAM). Although this approach is fast, RAM costs much more than disk, so such a system becomes expensive to run once you have several hundred GB of data.

Kafka relies on the disk for storage and caching. The problem is that disks are slower than RAM.

Kafka gets close to RAM-like latency from disk through sequential IO: records are appended to the end of a log file and read back in order, so the disk works through contiguous blocks instead of seeking to random positions.

What is Sequential IO?

Here is an excellent analogy which explains the Sequential IO in a much simpler way
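In code terms, sequential IO amounts to an append-only file that is written at the end and read front to back. The sketch below is a toy illustration in Python, not Kafka's actual storage format: the `AppendOnlyLog` class, its length-prefixed record layout, and the in-memory position index are all assumptions made for this example.

```python
import os


class AppendOnlyLog:
    """Toy append-only log (hypothetical; not Kafka's real on-disk format)."""

    def __init__(self, path):
        self.path = path
        # Byte position of each record, indexed by its logical offset.
        self.positions = []
        # Start from an empty file so positions match the file contents.
        open(path, "wb").close()

    def append(self, payload: bytes) -> int:
        """Write one record at the end of the file and return its offset."""
        with open(self.path, "ab") as f:
            position = f.seek(0, os.SEEK_END)
            # 4-byte big-endian length prefix, then the payload itself.
            f.write(len(payload).to_bytes(4, "big") + payload)
        self.positions.append(position)
        return len(self.positions) - 1

    def read_from(self, offset: int):
        """Seek once to the record at `offset`, then read forward in order."""
        with open(self.path, "rb") as f:
            f.seek(self.positions[offset])
            while True:
                header = f.read(4)
                if len(header) < 4:
                    break
                size = int.from_bytes(header, "big")
                yield f.read(size)
```

A writer only ever calls `append`, and a reader does a single seek followed by a forward scan, which is the access pattern that disks and the operating system's read-ahead handle best.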

Let’s understand what makes Kafka so fast

Zero-Copy Principle

According to Wikipedia,

“Zero-copy” describes computer operations in which the CPU does not perform the task of copying data from one memory area to another. This is frequently used to save CPU cycles and memory bandwidth when transmitting a file over a network.

Confusing, right?

Let’s look at this concept with a simple example. Consider the traditional way of transferring a file, where a client requests a file from a static website. The server first reads the static file from disk and then writes the exact same bytes to the response socket. This is very inefficient, even though it appears that the CPU is not doing much work here.

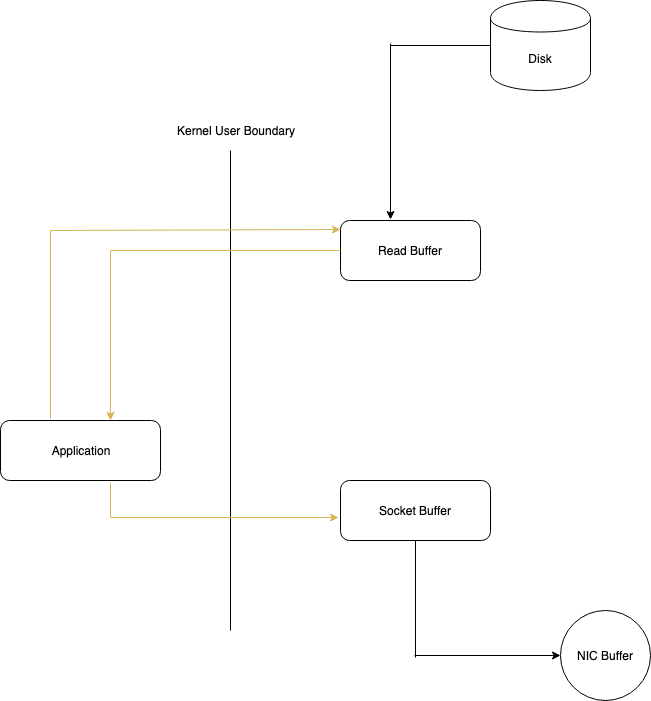

The kernel reads the data off of disk and pushes it across the kernel-user boundary to the application, and then the application pushes it back across the kernel-user boundary to be written out to the socket. In effect, the application serves as an inefficient intermediary that gets the data from the disk file to the socket.

Traditional Data Copying Approach
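The round trip described above can be sketched in a few lines of Python. This is only an illustration of the pattern, under the assumption of a blocking stream socket; the function name `copy_via_user_space` and the 64 KB chunk size are made up for the example.

```python
import socket


def copy_via_user_space(path: str, sock: socket.socket,
                        chunk_size: int = 64 * 1024) -> int:
    """Send a file by pulling each chunk into the application first.

    Every chunk crosses the kernel-user boundary twice:
    once on read() and once more on sendall().
    """
    sent = 0
    with open(path, "rb") as f:
        while True:
            chunk = f.read(chunk_size)   # kernel buffer -> user-space bytes
            if not chunk:
                break
            sock.sendall(chunk)          # user-space bytes -> kernel socket buffer
            sent += len(chunk)
    return sent
```

The application never inspects or changes the bytes here, yet it still pays for both copies on every chunk.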

Every time data is transferred across the user-kernel boundary, CPU cycles and memory bandwidth are consumed, and performance drops, especially when the data volumes are huge. This is exactly what the zero-copy principle addresses.

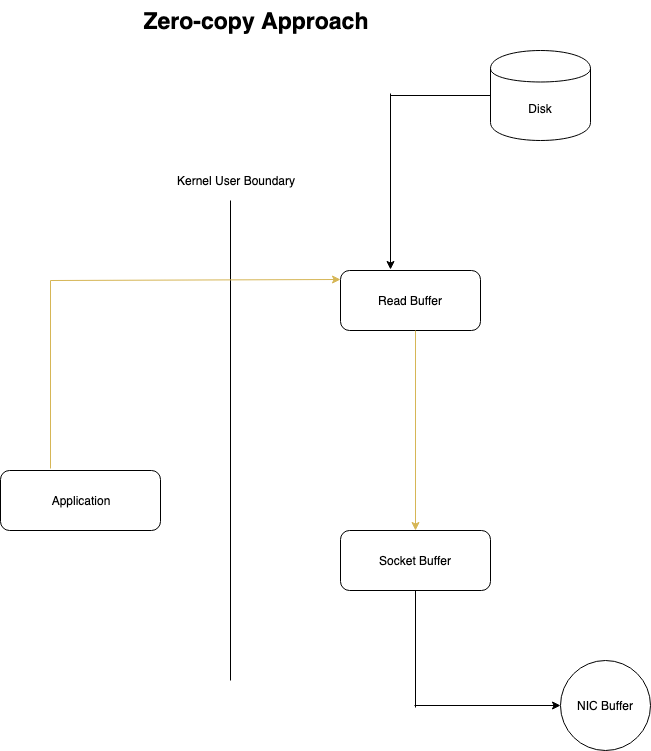

Kafka makes use of this zero-copy principle by asking the kernel to move the data directly to the socket rather than moving it through the application.

Zero-copy greatly improves application performance and reduces the number of context switches between kernel and user mode.

Zero-copy Approach.
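As a rough sketch of what asking the kernel to do the transfer looks like, Python's standard library offers `socket.sendfile`, which uses the operating system's `sendfile` call where it is available and otherwise falls back to an ordinary send loop. Kafka itself runs on the JVM, where the comparable call is `FileChannel.transferTo`; the Python version below is only an analogy, and the function name `send_zero_copy` is an assumption for this example.

```python
import socket


def send_zero_copy(path: str, sock: socket.socket) -> int:
    """Hand the whole file to the kernel and let it feed the socket.

    The file contents are not read into a Python bytes object, so the
    application does not act as an intermediary for the data.
    Returns the number of bytes sent.
    """
    with open(path, "rb") as f:       # sendfile requires binary mode
        return sock.sendfile(f)       # blocking SOCK_STREAM socket expected
```

Compared with a read-then-write loop, the application makes a single call per file and the data stays on the kernel side whenever the platform supports it.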

What else?

Kafka uses several other techniques, apart from the ones mentioned above, to make the system faster and more efficient:

- Batching of data to reduce network calls, and also converting a lot of random writes into sequential ones.

- Compression of batches (and not individual messages) using the LZ4, Snappy, or GZIP codecs. Much of the data is consistent across messages within a batch (for example, message fields and metadata information), which can lead to better compression ratios.
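To see why compressing a whole batch tends to beat compressing each message on its own, here is a small Python sketch using the standard library's `gzip` module. The message shape (`user_id`, `event`, `country`, `source`) and the helper names are invented for illustration, and joining messages with newlines is a simplification, not Kafka's batch format.

```python
import gzip
import json


def make_messages(n: int) -> list[bytes]:
    """Build n small JSON messages that share the same field names."""
    return [
        json.dumps({"user_id": i, "event": "page_view",
                    "country": "IN", "source": "web"}).encode()
        for i in range(n)
    ]


def compressed_size_per_message(messages: list[bytes]) -> int:
    """Total bytes when every message is compressed individually."""
    return sum(len(gzip.compress(m)) for m in messages)


def compressed_size_as_batch(messages: list[bytes]) -> int:
    """Total bytes when the messages are joined and compressed once."""
    return len(gzip.compress(b"\n".join(messages)))


if __name__ == "__main__":
    batch = make_messages(100)
    print("per message:", compressed_size_per_message(batch))
    print("as a batch: ", compressed_size_as_batch(batch))
```

Because the field names repeat in every message, the compressor can reuse them across the batch, and a single compressed stream also pays the codec's fixed header overhead only once.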
